For the past 6 years I’ve run this blog on the Subtext project (an Open Source software project which is an ASP.NET blog framework). It has served me very well with being flexible and allowing me to customize things that I want. It is based on SQL Server and uses stored procedures and relational database “stuff” to accomplish the goals of the design.

Recently I saw the news from Scott Guthrie about the reduction in pricing on some Windows Azure products, introducing a 100MB pricing option for SQL Azure which you can read about here and here. I thought this would be a good time to start looking at moving some of my infrastructure of my blog “to the cloud” so I started looking at the details…after all, $5/month seemed reasonable for my database.

Now, I’m only talking about the database portion here…not the ASP.NET application. Right now I’m happy being in complete control of my own server and have no need for moving the .NET site at this time. Perhaps in the future, but given the pricing on app hosting, it just doesn’t make sense for my little site here. I have, however, had some issues with the database infrastructure over the past year with some latency, outages and just age of the server it is hosted on for my site (not the same as my web app). Because of this I was investigating SQL Azure.

I’m happy to say that this site now runs the data side on SQL Azure…but the process was not without hiccups. I wanted to share some frustrations I had so that you might be able to avoid them. My comments below are related to migrating an existing SQL Server database to SQL Azure and not creating a new one from scratch.

My environment

To set the stage, it is fair to note my particular environment for migration. My database resides on a Windows 2003 server and is a SQL Server 2005 instance/database. My web application also resides on a Windows 2003 server and is configured in a virtual LAN to have access to the database infrastructure server.

Yes I realize these are not the “latest” in server products, but I also don’t think they are too old relatively speaking. They work to my needs and I hadn’t needed any of the new features for a long while (I’ve been desiring to move to Windows 2008 and ASP.NET MVC for Subtext though).

Getting the necessary tools

I chuckled a bit at how many client tools I actually needed to complete this process of moving things to the ‘cloud’ during this process. I’ve had experience in the past moving SQL databases around using SQL Management Studio (SSME) and other script methods. I thought this would be very similar using the import/export capabilities nicely provided in SSME.

I was wrong.

In doing this I started on the Windows Azure web site for some data. Now, I clearly didn’t navigate deep enough (more on that in a moment) because I wasn’t finding what I was looking for in a “migrating an existing database to Azure” article. So I did what anyone else would do and searched the web for ‘migrate sql to azure’. I was presented with a first top choice titled Migrating Databases to SQL Azure. This provided me with a few options, but no really good end-to-end example. Truth be told, I tried a few of these and was completely frustrated because the details were not complete.

In the end I found what I needed in the following tools that were essential to me. Here were the tools that you will need:

The last 2 bullets (direct connectivity) are needed to ensure you can do this from your machine. The last one – access to your SQL Azure server – isn’t entirely intuitive that you need it set up in advance, but you do. Another thing to note is that in order for the SQL Azure Migration Wizard to work, your SSME installation must be 2008 R2 SP1. The link above is to SP1 but I could not find a download for just the SSME tool for R2 SP1, so I downloaded the full SP1 of SQL Express With Tools and just installed the management studio.

Setting up the connections

Once you configure your Windows Azure account, you’ll need to create a SQL Azure server. This initially confused (and concerned) me because I only wanted my 100MB database account and not anything that will bump up my compute time costs. However I’ve been assured this just represents the “instance” of your DB and not a compute server. So you’ll need to configure that first.

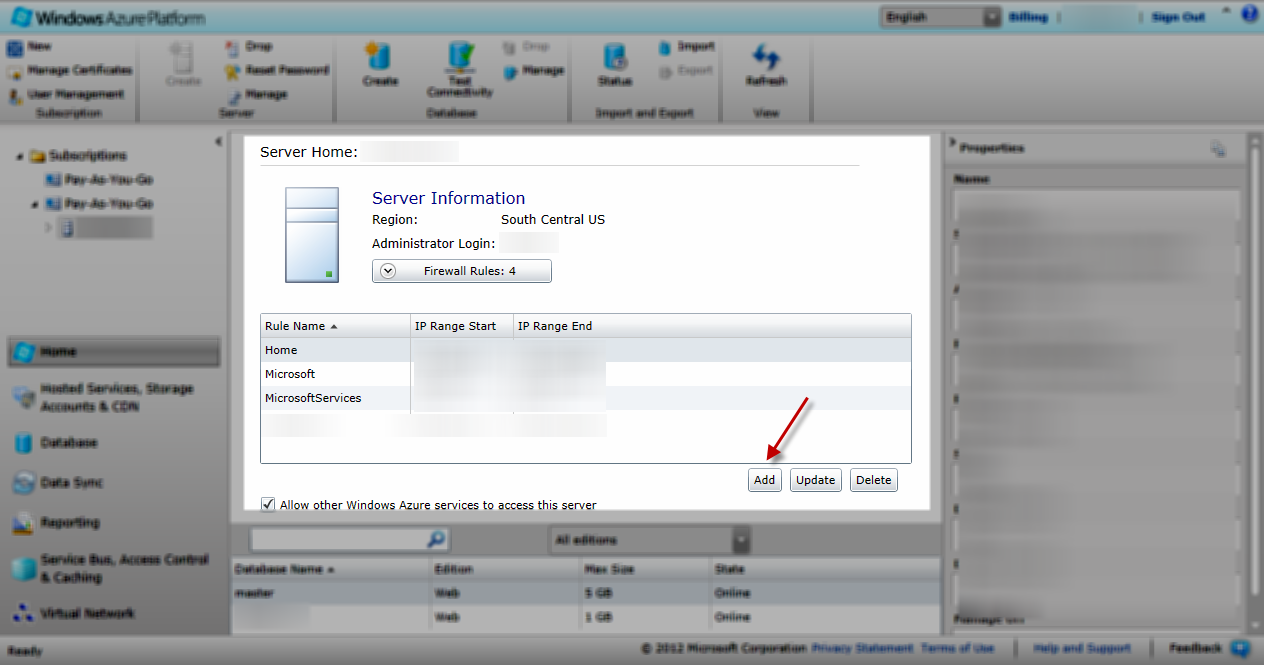

To do this you’ll login to your account at https://windows.azure.com and select the subscription server you set up for SQL Azure. You’ll then want to add a firewall rule to your server. The “Add” button shows your current IP address so you can add just that if you’d like.

This takes a few minutes to propagate so I’d do this first. Once you have this you can configure this connection in SSME to connect to. Your server name is on the right hand side of this screen (blurred for my account) and is something like XXXXXX.database.windows.net. In SSME you will connect to this as XXXXXX.database.windows.net,1433 as the server name.

Start by adding a connection in SSME for your current database and your SQL Azure server you just configured. Do not create any database yet at this time on SQL Azure.

Exporting the current database schema

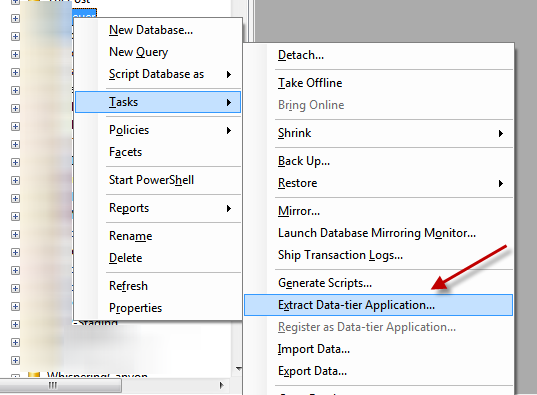

I tried a few different methods, but by far the easiest was the Data-tier Application method. To do this go to your existing database in SSME and right-click, choose Tasks and then Extract Data-tier Application:

This will create a “dacpac” file via the wizard you will be presented with. Essentially this extracts the schema and objects for you. Now why this instead of just a normal TSQL script? Your mileage may vary, but this was the only method I had real success with in my configuration.

Creating the database from the DACPAC

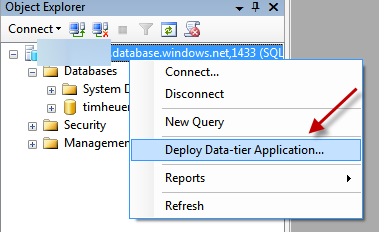

Once you have the exported .dacpac file go back to SSME and on your SQL Azure instance right-click and choose Deploy Data-tier Application:

This will create the database and schema for you, but not any data. This is another wizard that walks you through this process. Once complete you should have a new SQL Azure database matching the schema from your original one.

Migrating the data

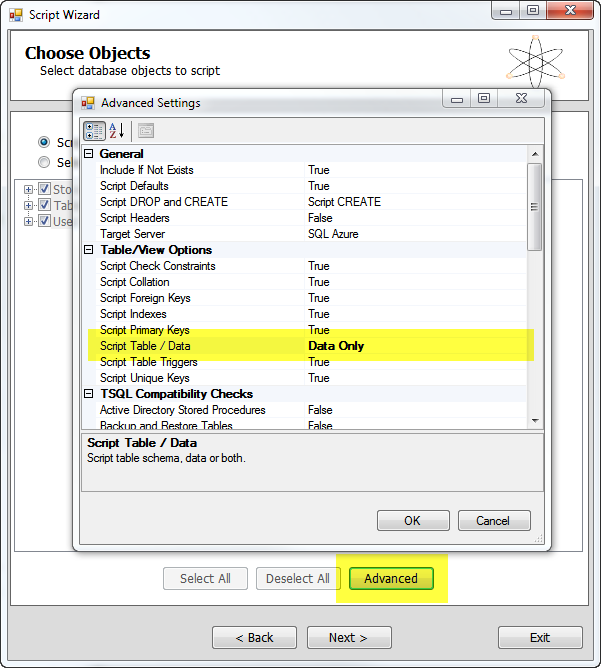

Once I had the new schema in my SQL Azure database I was ready to move the data. This is where the SQL Azure Migration Wizard comes into play. Launch that tool and you will be asked to choose a source and destination target. For the source, connect to your original database and after specifying the connection information (I chose just the db, not Master and was fine), click the Advanced button and change to Data only:

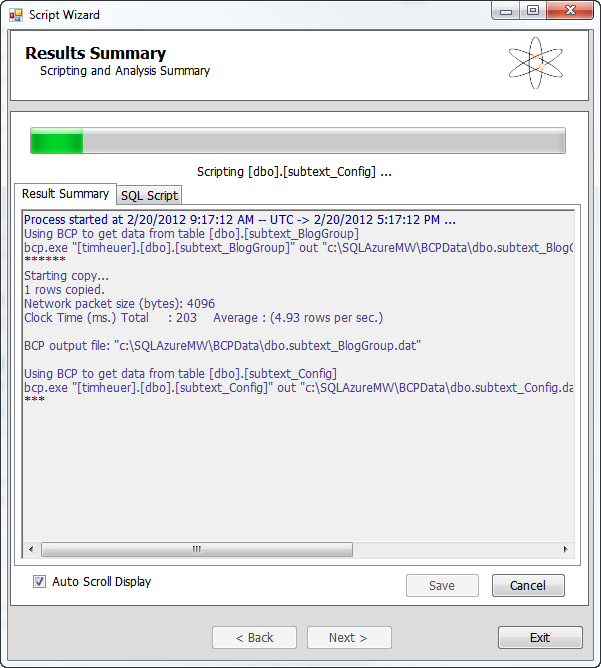

You will then start the process and notice that it is basically doing bcp.exe commands for you to extract the data:

Once this is done you will select the destination – your SQL Azure DB that was just created. Now since SQL Azure may not have the same features as your source database there may be some conflicts. The migration wizard tool will stop you on errors on the bcp import commands and give you a chance to resolve/continue those conflicts. As an example, some of my clustered indexes didn’t transfer over in the schema creation (no idea why) and I needed to re-create those before two tables could be imported. No big deal, but it was cool that the import was “paused” for me with a Retry function so that I could do this without starting all over.

Migration clean-up

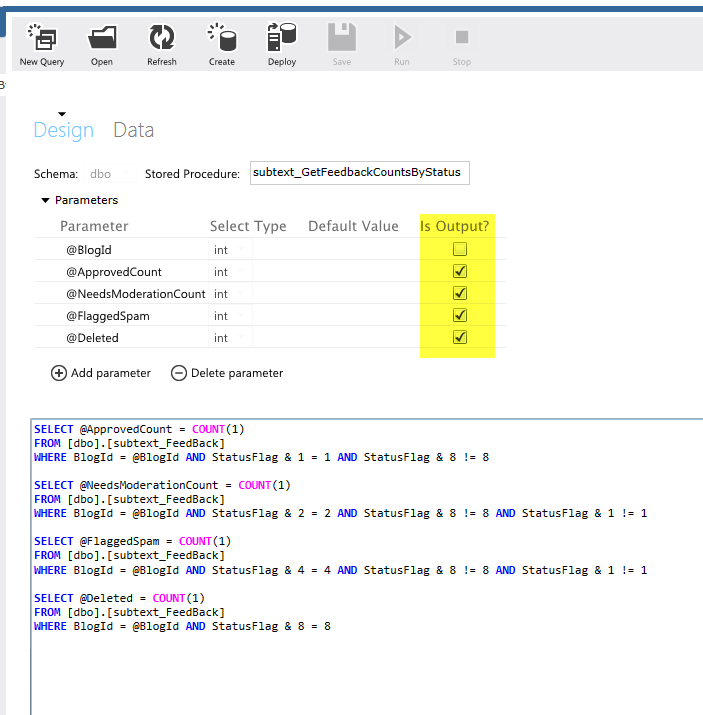

There were a few things that didn’t migrate well for my Subtext experiment here. First, even though stored procedures in my source database had correctly identified some parameters as ‘out’ it seems they didn’t transfer well. I’m not sure if this is an issue with the Data-tier Application export or something in SQL Azure, but it required me to go back and ALTER those procs with the correct flag of OUTPUT. Luckily I could actually do this through the Silverlight application for managing my database just fine after the database was configured:

Logins also didn’t transfer, but users did. My web app doesn’t use an admin user so I wanted to make sure I had a correct login for that. Through SSME connected to SQL Azure, there is not the GUI interface for doing these and you have to use all TSQL scripts. The commands in SSME when connected to SQL Azure DB will generate the template for you and you just put in the right values.

Subtext uses some system stored procedures in some admin screens that I use and sp_spacesed is not available. Luckily others have seen this and I just needed to modify some areas to use similar scripts.

General frustrations

Some of you may be looking at this and wondering why I had so much trouble. Why didn’t I just read this document (don’t you hate me for putting that last) that walked me through similar steps (minus migration wizard)? That would be a good question. That document didn’t show up in search for me anywhere and it is under the “Develop” section of the Azure information site. I didn’t think to look for as I wasn’t developing anything just yet. I only found out about that link from my friend Peter Laudati. It would have saved me some time, but not most. The first link on that site shows to download SSME R2 RTM…but the migration wizard requires SP1 and without it you’d see an error message about some missing SQL types (Smo).

Why didn’t I just use the migration wizard for schema *and* data…why two steps? That’s a good question. Frankly I don’t know why the migration wizard itself didn’t work for me for the wholesale schema+data approach. It could be my SQL2005 version or something. But for me, it just didn’t work. The steps above were the only paths that worked for me and my SQL2005 database to migrate.

Summary

While I was successful in finally migrating my database, discovering the proper steps wasn’t as in-my-face as it should be. There are pieced together areas about migrating, but the MSDN article I expected to be a more full-featured end-to-end example. I remember there was a big push for Access->SQL Server and there was an “upsizing wizard” provided from Microsoft to move that data. I wish that Azure had something more one-stop like this. The migration wizard seems like a first approach, but didn’t work as smooth as a one-stop solution for me…hopefully it does for others. It would have also been nice to have this actually integrated into the tools of the Azure portal. Let me provide a connection to my existing database and just magically create the Azure one for me – that would have been awesome.

Once my migration was complete, everything in SQL Azure is working with my app as expected and the tools are familiar to me to do any maintenance on the data that I need. I like the Silverlight management interfaces via the web that I can get a snapshot of my datbase at anytime and they even display query performance on the dashboard which is cool. I don’t have access to the log files nor am I able to run DBCC commands anymore, but I’m trusting that SQL Azure is more efficient than my own DBA skills of old and that my database will be managed effectively with regard to these items.

Hope this helps.