Making my NuGet Package more trusted with signing and doing it all in Azure DevOps Pipelines

Like most things in life, this all started with someone’s message on Twitter.

Blogged: Why NuGet Package Signing Is Not (Yet) for Me. https://t.co/4RSes2Bo4r

— Boom Haackalacka (@haacked) April 3, 2019

A close look at NuGet package signing feature, where it's useful, and in which ways it falls short.

You can code-sign a NuGet package? I don’t know why I didn’t know that, other than I had no idea I should care and never gave it a second thought. But Phil’s article and the discussion that followed on Twitter made me realize I should take a look at the flow. After all, more sage wisdom from Phil pushed me over the edge:

There’s no harm to anyone if you do sign your package.

— Boom Haackalacka (@haacked) April 3, 2019

You’re right, Phil, and how can you argue with that forehead… so let’s do this.

What is NuGet? NuGet is a package management system that was primarily developed for .NET developers and has become the de facto package and release mechanism for that ecosystem. What npm is to Node.js developers, NuGet is to .NET developers. More info at https://www.nuget.org.

I’ve got a little library, Alexa.NET, that helps .NET developers be more productive when creating Alexa apps. When I started this project, I kept it on my local box, built it with Visual Studio, and manually uploaded the NuGet package. Then people made fun of me. And I ate my sorrows in boxes of Moon Pies. Luckily, I work with a bunch of talented folks who helped me see the light in DevOps and set up a CI/CD pipeline using Azure DevOps. Since then I’ve got my library building, a release approval flow, and automated packaging and publishing to the NuGet servers. I simply check in code and a new version is released. Perfect. Now I just want to add code signing to the package. Naturally, I did what every professional developer does: I Googled, then read the docs about code signing NuGet packages. Luckily, there is some pretty good documentation on Signing NuGet Packages!

The first thing you need is a code-signing certificate. There are many providers at different prices, so pick your preferred one. I chose DigiCert this time but have used other providers in the past. Getting a code-signing cert involves a bit more than getting an average SSL certificate, so be sure to follow the steps carefully. Once you have it, export both the DER and PFX versions, as you will need both for this process: the PFX bundles the certificate with its private key and is what you sign with, while the DER file contains only the public certificate. Your provider should have instructions on how to do this.

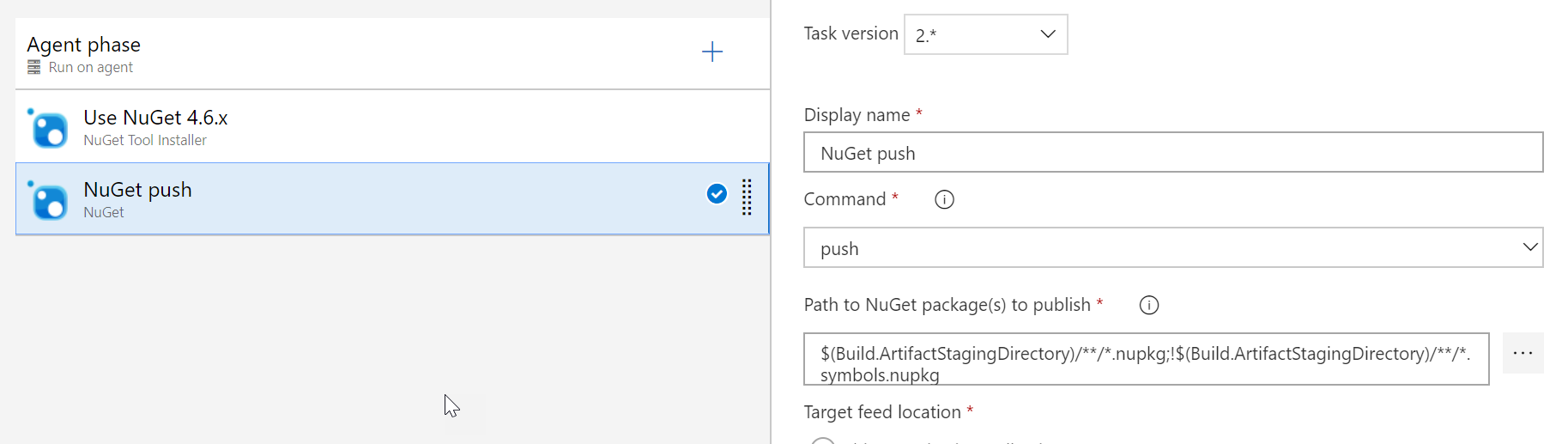

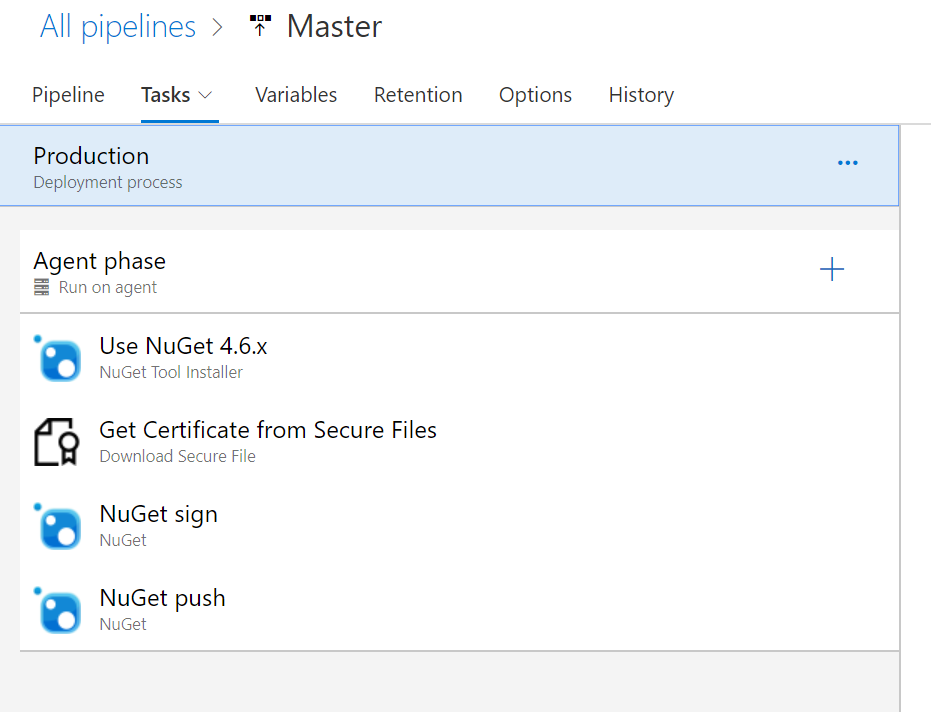
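Since the DER export is what you later hand to NuGet.org and the PFX is what the pipeline signs with, it can be handy to compute a fingerprint of the public certificate so you can confirm you are registering the certificate you expect. Here is a minimal Python sketch using only the standard library, assuming your provider gave you the certificate as either DER bytes or PEM text. The function names are hypothetical helpers, not part of any NuGet tooling, and the DER check is only a sanity check: a PFX file also starts with the byte 0x30, so don’t feed it the PFX.

```python
import hashlib
import ssl

PEM_HEADER = b"-----BEGIN CERTIFICATE-----"


def to_der(cert_bytes: bytes) -> bytes:
    """Return DER-encoded certificate bytes, converting from PEM if needed."""
    if cert_bytes.lstrip().startswith(PEM_HEADER):
        # The stdlib helper strips the BEGIN/END lines and base64-decodes the body
        return ssl.PEM_cert_to_DER_cert(cert_bytes.decode("ascii").strip())
    # A DER-encoded X.509 certificate is an ASN.1 SEQUENCE (tag byte 0x30).
    # Heuristic only: a PKCS#12 (PFX) file also starts with 0x30.
    if cert_bytes[:1] != b"\x30":
        raise ValueError("input does not look like a PEM or DER certificate")
    return cert_bytes


def sha256_fingerprint(cert_bytes: bytes) -> str:
    """Uppercase hex SHA-256 digest over the DER bytes of the certificate."""
    return hashlib.sha256(to_der(cert_bytes)).hexdigest().upper()
```

For example, reading a placeholder file such as mycert.cer in binary mode and passing its bytes to sha256_fingerprint gives the same result whether the file is PEM or DER, because both are normalized to DER before hashing.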

Next up was modifying my Azure DevOps pipeline. I do my NuGet activity in a release pipeline that runs after a successful build, with an approval step before the deployment actually completes. My simple release pipeline looks like this:

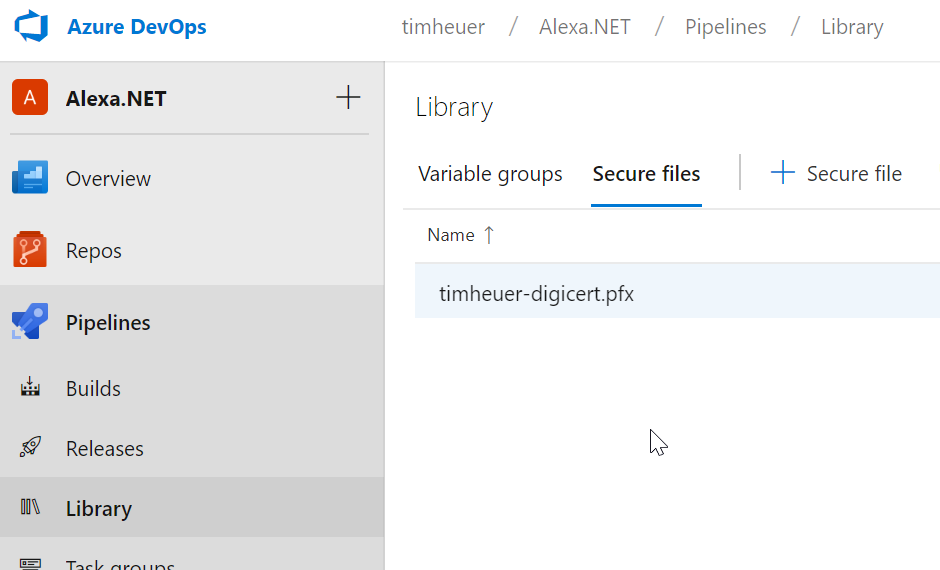

Signing is provided by the NuGet CLI, so I just needed to add another NuGet task to this flow, right? I added one and was going to choose ‘sign’ as the command. Well, ‘sign’ isn’t a selectable command in the task. There is currently a request to add it to the built-in options for the Azure Pipelines task, but it isn’t in there yet. So for this we will use the ‘custom’ option, which lets us pass in any command and arguments.

The docs already told me which arguments I would need, and at a minimum that means a certificate file. Hmm, how am I going to have a certificate file in my CD pipeline?! As it turns out, Azure Pipelines has secure file storage I can use! It lets me upload a file that I can later reference in a pipeline. Remember that PFX file we exported? In your DevOps project, under Pipelines, there is a ‘Library’ menu option. That takes you to where you can upload secure files, and I uploaded my PFX file there.

Next, I need to provide the password for that exported PFX file (you did export it with a password, right?!). To do this I used variable groups in Azure DevOps: I created a group called CertificateValues, added a CertificatePassword name/value pair there, and marked the value as a secret. Because it’s a variable group, I can ‘link’ it to any build/release definition without explicitly defining the variable in those definitions, which is super handy for sharing across definitions. You can also link a variable group to Azure Key Vault for secrets (more on that in a part 2 blog post). I now have my code-signing cert (PFX) and my certificate password stored securely. With these two things in place, I’m ready to continue with my definition.

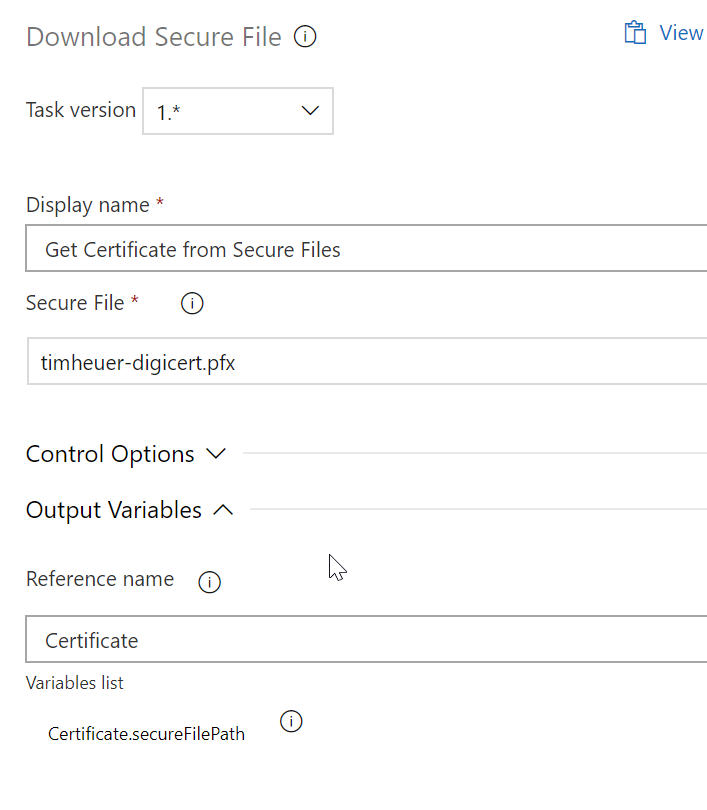
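The NuGet task resolves $(CertificatePassword) for you, but if you ever move this step into a script, note that secret variables are not exposed to scripts as environment variables automatically; you have to map them explicitly in the step’s environment settings. Here is a minimal Python sketch of failing fast when that mapping is missing, assuming a hypothetical CERT_PASSWORD environment variable mapped to $(CertificatePassword). The helper and exception names are illustrative only.

```python
import os
from typing import Mapping, Optional


class MissingSecretError(RuntimeError):
    """Raised when a secret pipeline variable did not reach the process."""


def read_secret(name: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Read an explicitly mapped secret from the environment or fail fast."""
    env = os.environ if env is None else env
    value = env.get(name, "")
    # An unresolvable $(Macro) is left as literal text, so treat it as missing too
    if not value or value.startswith("$("):
        raise MissingSecretError(f"secret {name!r} is not mapped into this step")
    return value
```

Checking for the literal "$(" prefix matters because a typo in the mapping doesn’t produce an empty value; the unexpanded macro text is passed through and would otherwise be sent to nuget.exe as the password.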

Now how will I get the file out of secure storage?! As I read in the docs, there is a Download Secure File task that I can add to my pipeline. Its configuration asks which secure file to use, and in the Output Variables area I set the Reference name to ‘Certificate’.

That reference name lets me use the file later in my definition as $(Certificate.secureFilePath), so I don’t have to fiddle around guessing where it was downloaded to on the agent machine. With that figured out, let’s move back to the signing task… remember that ‘custom’ one we talked about earlier? In the custom task’s Command and arguments field, I specify the full command and arguments I need according to the docs. My full definition looks like this:

```
sign $(System.ArtifactsDirectory)\$(Release.PrimaryArtifactSourceAlias)\drop\*.nupkg -CertificatePath $(Certificate.secureFilePath) -CertificatePassword $(CertificatePassword) -Timestamper http://timestamp.digicert.com
```

To explain a bit: I’m using the predefined variables System.ArtifactsDirectory and Release.PrimaryArtifactSourceAlias to build the path to the drop folder on the agent machine. The others come from the secure file (Certificate.secureFilePath) and the variable group (CertificatePassword) set up earlier. At run time these resolve to real values (the secret is masked in the logs, as shown below), and the task completes.
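If you’d rather drive the same call from a script step instead of the custom NuGet task, the predefined and output variables are available to scripts as environment variables, with the name uppercased and ‘.’ and spaces replaced by ‘_’; secrets are the exception and must be passed in explicitly. Here is a minimal Python sketch that rebuilds the argument list, assuming a Windows hosted agent like the one in the log. The helper names are hypothetical, and the -NonInteractive flag is included only because the NuGet task appended it in the log.

```python
import os
from pathlib import PureWindowsPath
from typing import List, Mapping, Optional


def env_name(variable: str) -> str:
    """Map a pipeline variable name to its environment-variable form."""
    # Uppercase the name and replace '.' and ' ' with '_'
    return variable.upper().replace(".", "_").replace(" ", "_")


def build_sign_args(
    password: str,
    env: Optional[Mapping[str, str]] = None,
    timestamper: str = "http://timestamp.digicert.com",
) -> List[str]:
    """Build the same `nuget sign` arguments the custom task receives."""
    env = os.environ if env is None else env
    artifacts = env[env_name("System.ArtifactsDirectory")]
    alias = env[env_name("Release.PrimaryArtifactSourceAlias")]
    # Windows agent path: <artifacts>\<alias>\drop\*.nupkg
    packages = str(PureWindowsPath(artifacts, alias, "drop", "*.nupkg"))
    return [
        "sign", packages,
        "-CertificatePath", env[env_name("Certificate.secureFilePath")],
        # Secret variables are not exported automatically; the caller passes it in
        "-CertificatePassword", password,
        "-Timestamper", timestamper,
        # The NuGet task appended this flag itself in the log output
        "-NonInteractive",
    ]
```

From a script you could then run nuget.exe with these arguments through subprocess.run(..., check=True), taking the password from an explicitly mapped secret rather than hard-coding it.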

Here is my log output from today, in fact:

```
2019-04-04T19:10:44.4785575Z ##[debug]exec tool: C:\hostedtoolcache\windows\NuGet\4.6.4\x64\nuget.exe
2019-04-04T19:10:44.4785807Z ##[debug]arguments:
2019-04-04T19:10:44.4786015Z ##[debug] sign
2019-04-04T19:10:44.4786248Z ##[debug] D:\a\r1\a\_Alexa.NET-master\drop\*.nupkg
2019-04-04T19:10:44.4786476Z ##[debug] -CertificatePath
2019-04-04T19:10:44.4786687Z ##[debug] D:\a\_temp\timheuer-digicert.pfx
2019-04-04T19:10:44.4786916Z ##[debug] -CertificatePassword
2019-04-04T19:10:44.4787190Z ##[debug] ***
2019-04-04T19:10:44.4787449Z ##[debug] -Timestamper
2019-04-04T19:10:44.4787968Z ##[debug] http://timestamp.digicert.com
2019-04-04T19:10:44.4789380Z ##[debug] -NonInteractive
2019-04-04T19:10:44.4789939Z [command]C:\hostedtoolcache\windows\NuGet\4.6.4\x64\nuget.exe sign D:\a\r1\a\_Alexa.NET-master\drop\*.nupkg -CertificatePath D:\a\_temp\timheuer-digicert.pfx -CertificatePassword *** -Timestamper http://timestamp.digicert.com -NonInteractive
2019-04-04T19:10:52.6357013Z
2019-04-04T19:10:52.6357916Z
2019-04-04T19:10:52.6358659Z Signing package(s) with certificate: <snip to remove cert data>
2019-04-04T19:10:52.6360408Z Valid from: 4/4/2019 12:00:00 AM to 4/7/2020 12:00:00 PM
2019-04-04T19:10:52.6360664Z
2019-04-04T19:10:52.6360936Z Timestamping package(s) with:
2019-04-04T19:10:52.6361268Z http://timestamp.digicert.com
2019-04-04T19:10:52.6361576Z Package(s) signed successfully.
```

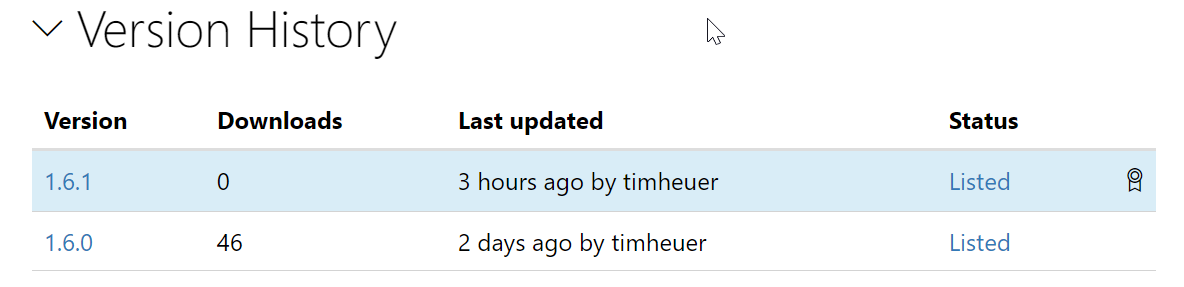
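The *** in the log above is the agent at work: it substitutes the $(Name) macros before the task runs and masks any value registered as a secret. The following is a toy Python model of those two steps for understanding only, not Azure Pipelines’ actual implementation; the function names are hypothetical, and it uses exact-case name matching for simplicity.

```python
import re
from typing import Iterable, Mapping

# Matches $(Name) where Name may contain letters, digits, '_' and '.'
MACRO = re.compile(r"\$\(([A-Za-z0-9_.]+)\)")


def expand_macros(text: str, variables: Mapping[str, str]) -> str:
    """Replace $(Name) with its value; unknown macros are left unchanged."""
    # A function replacement keeps backslashes in values (Windows paths) literal
    return MACRO.sub(lambda m: variables.get(m.group(1), m.group(0)), text)


def mask_secrets(text: str, secrets: Iterable[str]) -> str:
    """Replace every occurrence of each non-empty secret with ***."""
    # Mask longer secrets first so a shorter one can't split a longer one
    for secret in sorted((s for s in secrets if s), key=len, reverse=True):
        text = text.replace(secret, "***")
    return text
```

Run against the custom command from this post with the agent values from the log, expanding and then masking yields the same argument string that appears on the [command] line, minus the -NonInteractive flag the task adds.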

Done! A few simple added tasks and some doc reading got me a signed NuGet package. Re-reading the docs on signed packages, though, I have to upload my certificate to my NuGet profile for it to be recognized. This time I only need to provide the DER export. Once it’s registered and my package is published, I get a little badge next to the listing showing that this is a signed package.
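NuGet stores a package’s signature as a .signature.p7s file at the root of the .nupkg, which is just a zip archive. Here is a minimal Python sketch of a pre-publish sanity check, assuming you have the package file locally; the helper name is hypothetical. It only checks that a signature file is present. Actually validating the signature and certificate chain is what nuget verify -Signatures is for.

```python
import io
import zipfile
from typing import Union

# Signed packages carry their signature as this entry at the archive root
SIGNATURE_ENTRY = ".signature.p7s"


def has_package_signature(nupkg: Union[str, bytes]) -> bool:
    """Return True if the .nupkg (path or raw bytes) contains a signature file."""
    source = io.BytesIO(nupkg) if isinstance(nupkg, bytes) else nupkg
    with zipfile.ZipFile(source) as archive:
        return SIGNATURE_ENTRY in archive.namelist()
```

Running this over the drop folder before the push step would catch a pipeline that silently skipped signing, without depending on nuget.exe being on the machine.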

This was a good exercise that taught me a few extra steps in Azure DevOps for working with files and custom task variables. Even as I was doing this, my friend Oren Novotny couldn’t help but chastise me for this approach.

I am

— Oren Novotny (@onovotny) April 3, 2019

So stay tuned for a second approach that does this entirely with Azure Key Vault, without having to upload a certificate file.