Migrating from .NET 3.5 to ASP.NET Core 3

Reflecting back on this blog, I realized it’s been 16 years of life here. The content focus hasn’t always been consistent, with tech in the early years versus a more random outlet for my thoughts (and an apparent lack of concern over punctuation and capitalization). Some months had more volume, and as my career (and perhaps passions) changed, some had less.

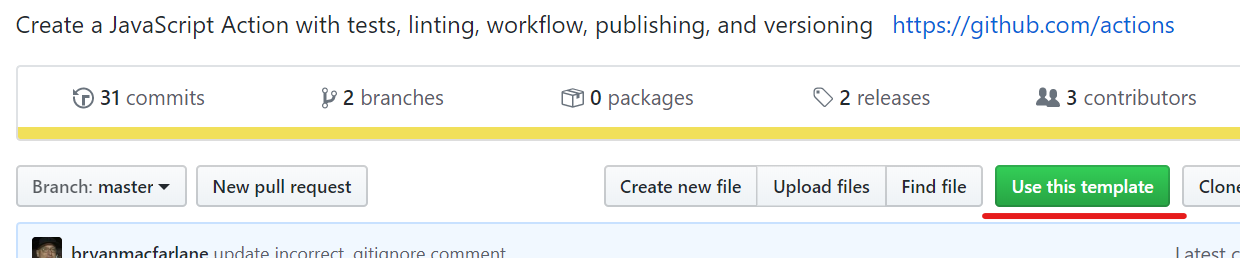

I’ve enjoyed getting back into posting more recently and finding more time (and again, perhaps the passion) to do so. I think also with the various newer outlets of social media, my personal passions are now posted elsewhere, like Instagram (if you want to follow my escapades, mostly on the bike). This summer, in 2019, I switched job roles at Microsoft back into program management with the .NET team. I’m focusing on a few different things, but having spent so much time in UI frameworks on the client side, I missed some waves of changes in ASP.NET and needed to re-learn. I spent the first month of my new role doing this and exploring the end-to-end experiences. Instead of building a To-do app, I wanted a real scenario to work with, so I set off to migrate my blog…it was time anyway, as just that week I had received warnings on my server about some errors. I could avoid this no longer. It wasn’t an easy path, but here was my journey.

Existing website frameworks

My blog started on 20-Aug-2003 and was initially built using Community Server (from Telligent). That quickly forked into a product called .TEXT from Scott Watermasysk and I moved to using that, as it was solely content management and not forums or other things I didn’t need. I stayed on .TEXT for as long as it lived, until another fork happened. This time the .NET ecosystem around Open Source was improving and this fork was one of those projects. Phil Haack, along with others, created SubText, which was initially a pretty direct fork but quickly evolved. I wasn’t much interested in the code at this point, but wanted to follow ‘current’ frameworks, so I moved to SubText. All along this path it was easy because these migrations were similar, using the same frameworks and similar (if not the same) data structures. The SubText site was an ASP.NET 2.0 site using SQL Server as the data store. Over time this moved to .NET 3.5 but not much further after that (for me at least).

Over time I made a few adjustments to the SubText environment for me, never really concerning myself about the source, but just patching crap in random binaries that I’d inject into the web.config. My last one was in 2013 to support Twitter cards and it was a painful reminder at the time that this site was fragile. By this time as well the SubText project itself was fragile and not really being maintained as ASP.NET had moved to newer things like MVC and such. The writing was on the wall for me but I ignored it.

Hosting environment

In addition to the platform/framework used, I was using an interesting hosting setup. Well, not abnormal really, considering that in 2003 there was no ‘cloud’ as we know it today. I had a dedicated box (1U server) hosted at my own data center (I was managing, among other things, a data center rack at the time). This was running Windows 2000 Server and whatever goop came with it. Additionally, this was SQL Server Express [insert some old version here]. I had moved on to another job and after a period of time I needed to move that server. I was using the server for more than just my site, running about 10 other WordPress blogs for my community, my wife’s business, and various other things. The WordPress sites were constantly being attacked/hacked due to vulnerabilities in WordPress, which led to my server being filled with massive video porn files. I wouldn’t know until my site was down, and then I had to log in remotely and clean crap up. Loads of fun. I eventually moved that server to a co-located environment at GoDaddy, still maintaining a dedicated 1U server for me. It was nice having direct access to the server to do whatever I wanted, but I soon no longer needed that type of hands-on configuration…while still dealing with the management.

During each of these moves I was just moving folders around. I had no builds, no reliable copy of the original source code, etc. “Fixing” things meant writing new code and finding interesting ways to redirect some SubText functionality, as I didn’t have the source nor was I interested in digging up tooling to get the source to work. I never upgraded from Windows 2000 Server and was well beyond support for the things I was doing. When I wanted to upgrade the OS at GoDaddy, I was faced with a “Sure, we’ll set up a new server for you and you migrate your apps” approach. So again I was going to have to re-configure everything. Another nightmare waiting, and I just put it off.

To the Cloud!

My first step was moving data to the cloud. I wrote about this when I did this task back in 2012. I quickly learned that unless my site was on Azure as well, the egress costs were not going to be attractive given the traffic I was still getting. A few years ago I went about moving just my blog app to Azure App Service as well. Not having anything to build, this was going to be a fun ‘deployment’ where I needed to copy a lot of things manually to my App Service environment. I felt dirty just FTP-ing in to the environment and continually trying things until it worked. But it eventually did. I had my .NET Framework SubText app running on App Service and using Azure SQL. The cool thing about Azure SQL is the monitoring and diagnostics it provides. I was immediately met with a few recommendations and configuration changes that I should make and/or that it automatically made on my behalf. That was awesome. I did have one stored procedure from SubText that was causing all kinds of performance havoc and contributing to me hitting capacity with my chosen SQL plan, requiring me to bump up to the next plan at more cost. I wanted neither. And because, as I mentioned, I didn’t have SubText in a buildable state, I couldn’t reliably change the stored proc without also changing the code that called it. Just another dent in the plan. I needed a real migration plan.

Migrate to ASP.NET Core

I mentioned that in my new role I needed to spend time re-learning ASP.NET and this was a perfect opportunity. I decided to dedicate the time and ‘migrate’ to ASP.NET Core. Why the air quotes? Because realistically I couldn’t migrate anything but data. I did not have reliably building source for SubText, and even if I had, it was WebForms and I didn’t know what I might be getting myself into. I needed a new plan, which meant a new framework, and I went looking. Immediately I was met with recommendations that I should go with static sites, that Jekyll and GitHub Pages are the new hotness, and why would I want anything else. I don’t know; for me, I still wanted some flexibility in the way I worked and I wasn’t seeing that I’d be able to get what I wanted out of a static site approach. I wanted to move to an ASP.NET Core solution and found a few frameworks that looked attractive. Most were in varying states of completion and others felt just too verbose for my needs. I landed on a recommendation to look at Miniblog.Core. This was the smallest, simplest, most understandable solution to my needs that I found. No frills, just render posts with some dynamism.

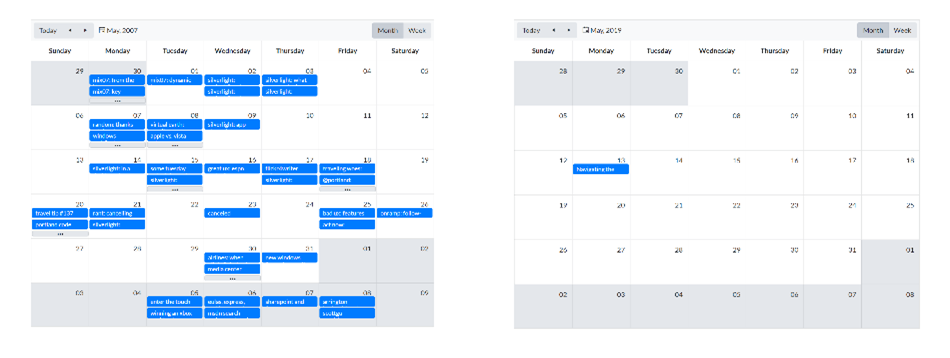

I did not even attempt to migrate any of my existing ASP.NET WebForms code or styling, as modern platforms were using Bootstrap and other things to build the site, so that was where I needed to start. I spent a good amount of time working on the simple styling structure for a few things and learning MVC in the process to componentize some of the areas. I added a few pieces of customization to the miniblog source: search routing (using Google site search), a timeline/calendar view thanks to Telerik controls, category browsing (although mine is horrendous due to waaay too many categories used over the years), Disqus for commenting, SyntaxHighlighter for code formatting support, an image provider for my embedded images during authoring, and a few other random things. I wrote a little MVC controller to do the one-time data migration from SQL to the XML file-based storage that MiniBlog uses. Migrating the data to the new structure was a lot simpler than I thought it would be. Luckily my old blog had ‘slug’ support and this new one had it as well, so the URI mapping worked fine, but now I had to ensure the old routing would work. I had to exercise some RegEx skills to accomplish this, but in the end I found a pattern that would match and implemented that in my routing, using proper redirect response codes:

    // This is for redirecting potential existing URLs from the old Miniblog URL format
    // old subtext non-slugged & slugged
    // https://timheuer.com/blog/archive/2003/08/19/145.aspx
    // https://timheuer.com/blog/archive/2015/04/21/join-windows-engineers-at-free-build-events-around-the-world-xaml.aspx
    [Route("/post/{slug}")]
    [Route("/blog/archive/{year:regex(\\d{{4}})}/{month:regex(\\d{{2}})}/{day:regex(\\d{{2}})}/{slug}.aspx")]
    [HttpGet]
    public IActionResult Redirects(string slug)
    {
        // if the post was a non-named one we need to append some text to it otherwise it will think it is a page
        // if (slug doesn't contain letters) { redirect to $"post-{slug}" }
        var newSlug = slug;
        var isMatch = Regex.IsMatch(slug, "^[0-9]*$");
        if (isMatch) newSlug = $"post-{slug}";
        return LocalRedirectPermanent($"/blog/{newSlug}");
    }

That ended up being remarkably simpler than I thought it would be as well. Maintaining the URIs that had existed over time had been causing me stress all on its own, and using ASP.NET routing with RegEx I got what I needed quickly.
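To make the mapping rule easier to check outside of a running site, here is a minimal sketch that restates it as a plain function. It is not code from the blog or from MiniBlog: the `LegacyUrlMapper` class and `TryMap` method are hypothetical names, and the single regular expression is my own stand-in for the route template and its constraints.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical helper (not part of MiniBlog or ASP.NET) that restates the
// redirect rule as a pure function so it can be exercised without a web host.
public static class LegacyUrlMapper
{
    // Stand-in for the route template: /blog/archive/{yyyy}/{MM}/{dd}/{slug}.aspx
    private static readonly Regex ArchivePattern = new Regex(
        @"^/blog/archive/\d{4}/\d{2}/\d{2}/(?<slug>[^/]+)\.aspx$",
        RegexOptions.IgnoreCase | RegexOptions.CultureInvariant);

    // Returns true and sets newPath when the path is a legacy archive URL.
    public static bool TryMap(string path, out string newPath)
    {
        newPath = string.Empty;
        var match = ArchivePattern.Match(path);
        if (!match.Success) return false;

        var slug = match.Groups["slug"].Value;

        // Numeric-only slugs were unnamed posts; prefix them the same way
        // the controller action does so they are not mistaken for pages.
        var newSlug = Regex.IsMatch(slug, "^[0-9]*$") ? $"post-{slug}" : slug;

        newPath = $"/blog/{newSlug}";
        return true;
    }
}
```

With this sketch, `/blog/archive/2003/08/19/145.aspx` maps to `/blog/post-145`, while a slugged URL such as `/blog/archive/2015/04/21/join-windows-engineers-at-free-build-events-around-the-world-xaml.aspx` keeps its slug under `/blog/`.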

Moving to Azure App Service once this was all done was simple. When I first moved, ASP.NET Core 3.0 wasn’t yet available there, so I had to deploy as a self-contained app. This isn’t difficult though, and in some cases may be more explicitly what you want to do. I wrote about how to Deploy .NET apps as self-contained so you can follow the steps. This is basically a ‘bring the framework with you’ approach for when the runtime might not be there. Azure App Service now has .NET Core 3.1 available, so I no longer have to do that, but it is good to know I can test future versions of .NET by using this mechanism.
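As a rough illustration of what ‘bring the framework with you’ looks like in a project file, a self-contained publish is typically switched on with two MSBuild properties. This is a sketch, not the actual configuration used for this blog: the `win-x86` runtime identifier and the `netcoreapp3.0` target are assumptions chosen for the example.

```xml
<!-- Fragment of a web project's .csproj; the values are assumptions for illustration -->
<PropertyGroup>
  <TargetFramework>netcoreapp3.0</TargetFramework>
  <!-- Assumed target: pick the runtime identifier that matches your host -->
  <RuntimeIdentifier>win-x86</RuntimeIdentifier>
  <!-- Publish the .NET Core runtime alongside the app -->
  <SelfContained>true</SelfContained>
</PropertyGroup>
```

Once the host offers the runtime you need, removing these two properties returns the app to a framework-dependent deployment.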

Summary

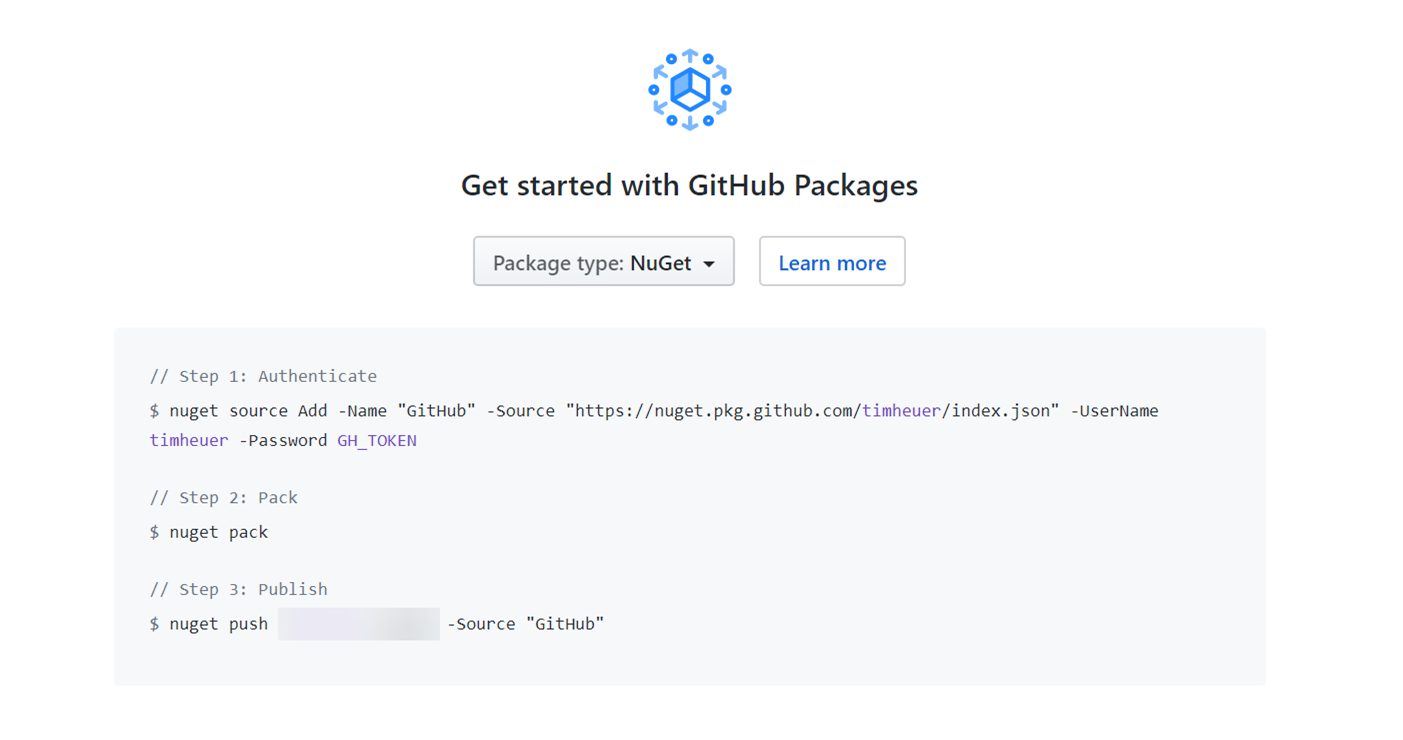

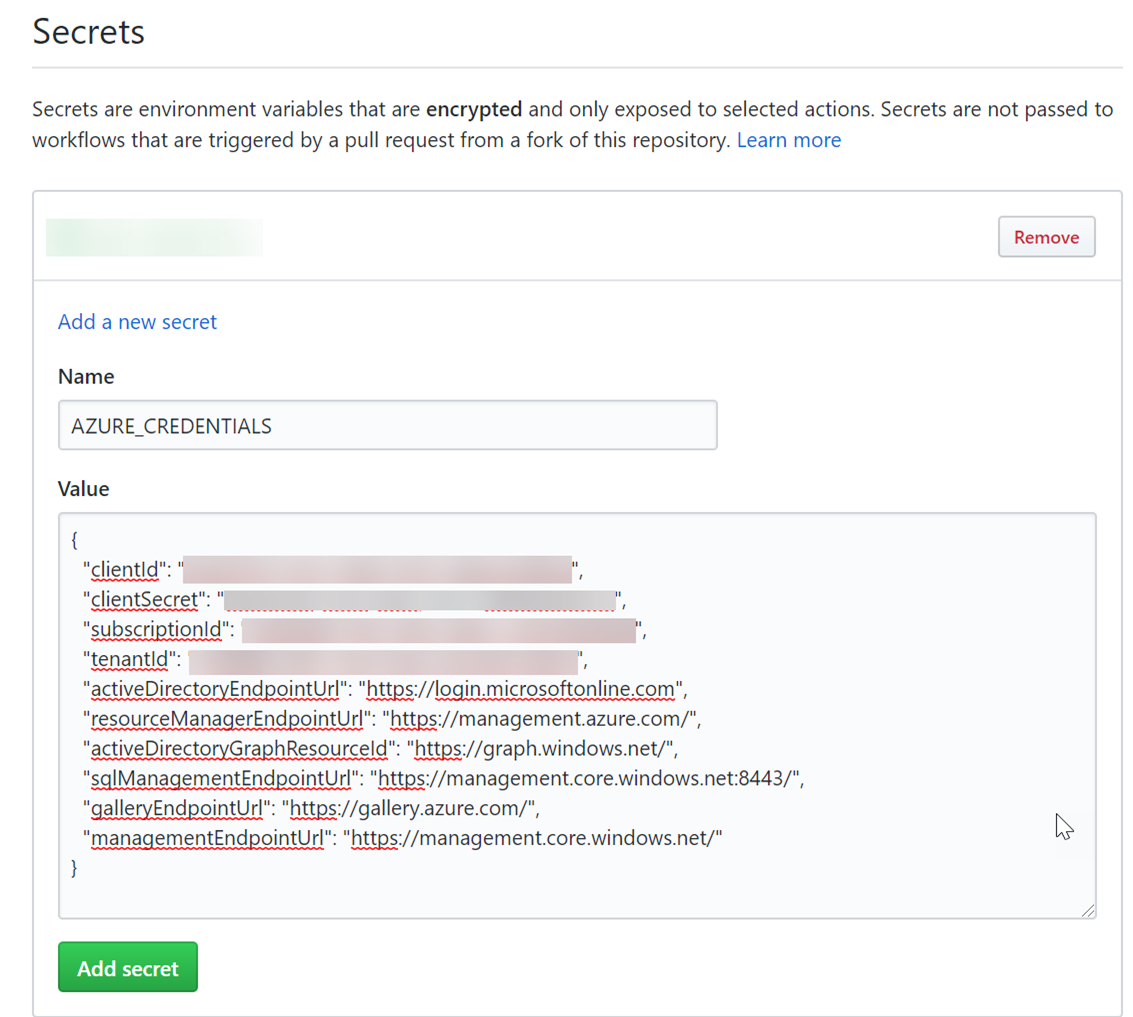

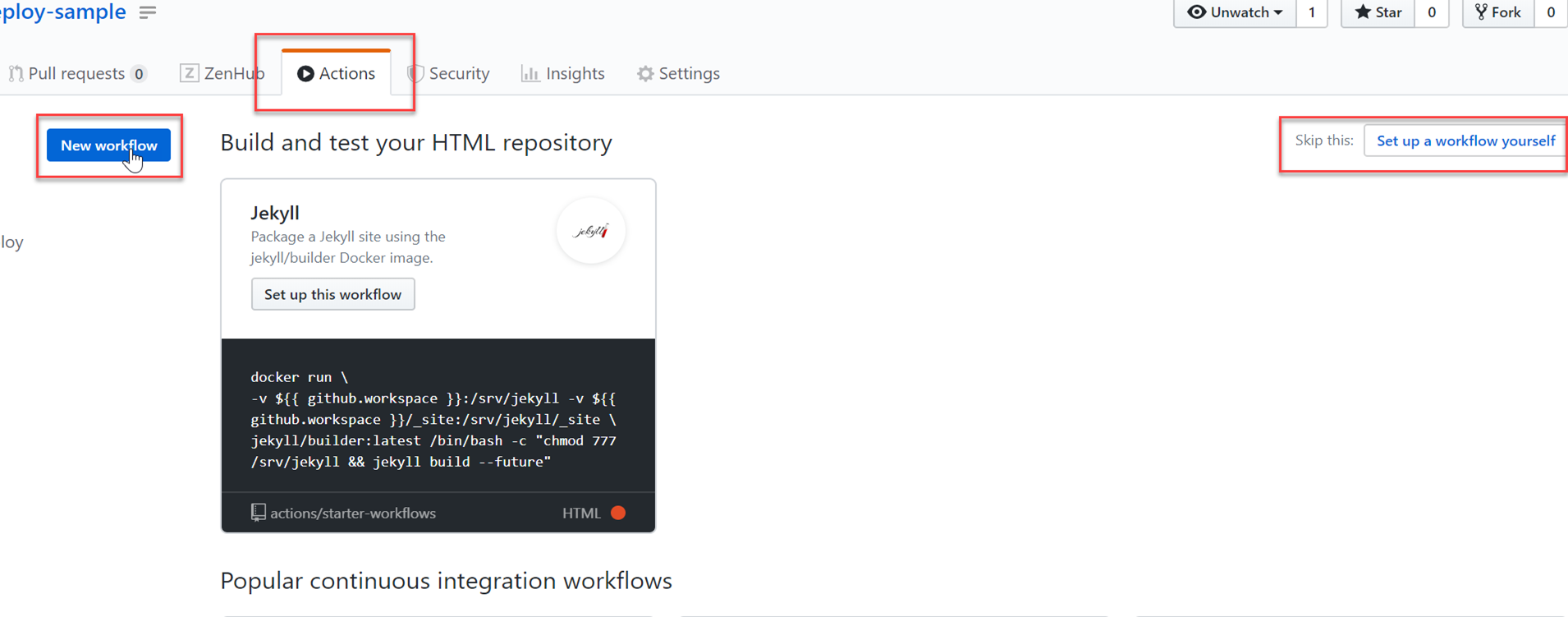

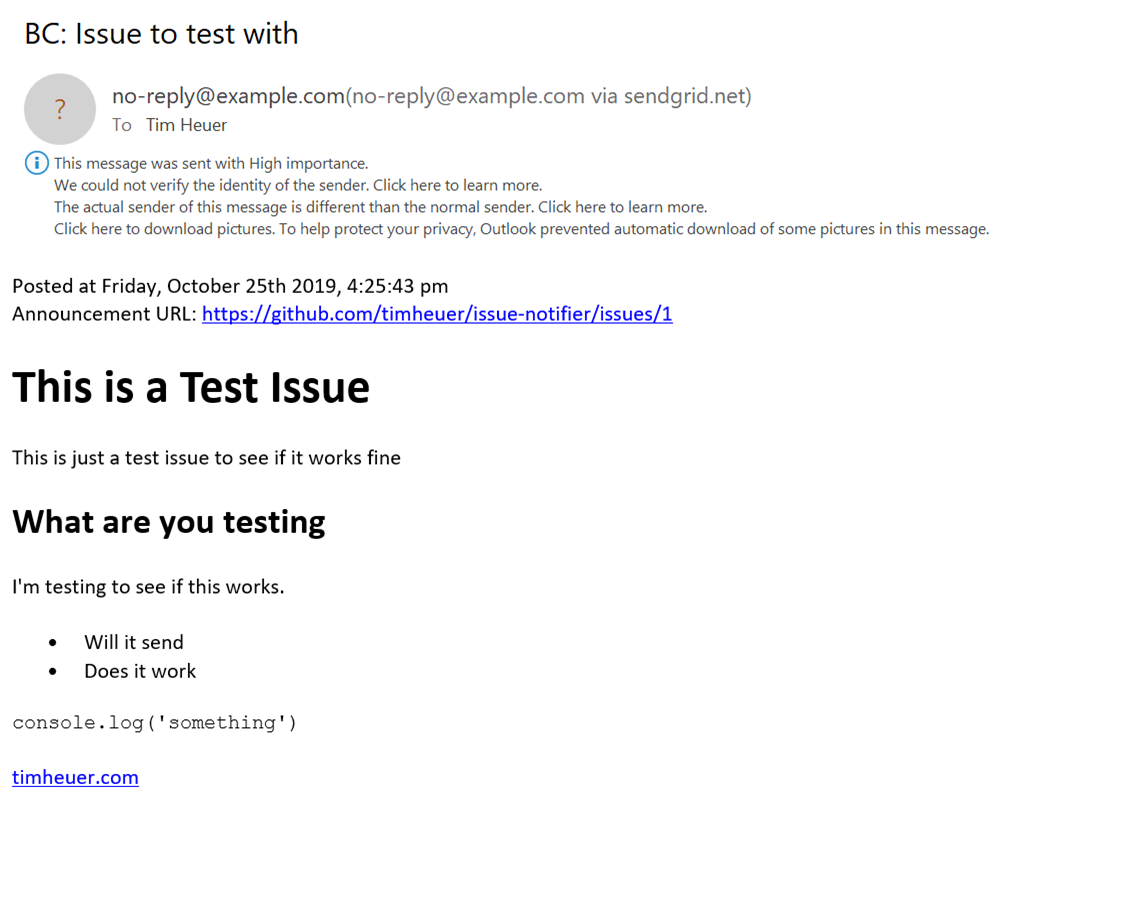

So what did I learn? Well, not having source for the apps you care about hurts. I didn’t even get a chance to truly attempt migrating SubText to ASP.NET Core because I had let my implementation rot for so long. I have become such a huge believer in DevOps now it’s unreal. I won’t do even a simple project without it. The confidence you gain when your projects have continuity through automation is amazing. My new blog app is fully run on DevOps and deploys using that as well…I just commit changes and they are deployed when I approve them. I learned that even though this was ‘just a blog’, it was a fairly involved app with separation of user controls and things. It didn’t need to be so complex, but it was, and I’m glad MiniBlog isn’t. The performance and costs of my content site are much more manageable now, and my stress is reduced knowing that should anything happen I’m in a better place for restoring a good state.

My biggest TODO task, I think, is re-thinking the XML-based data store. This is actually the one thing causing me some DevOps pain, because the ‘data’ is content within the web app, and that doesn’t work well with slot-staging deployment. Azure has a way to use Azure Storage as a mount point to serve content from in your App Service, and I’ve started to try that, with mixed results so far. Using this approach separates my app from my data and allows for more meaningful deployment flows and data backup. I’ve also explored using an Azure Storage provider for my data layer, but the way the initial cache is built in MiniBlog right now makes this not a great story, due to startup latency when you have 2,000 posts to retrieve from a blob container. I’m still playing around with ideas here, so if you have some I’d love to hear them (dasBlog users would hit similar concerns).

I’m happy with where I landed and hope this keeps me on a path for a while. I’ve got a simple design, responsive design, easy-to-maintain source code, all the features I want (for now), no broken links (I think), works with my editing flow (Open Live Writer), and less stress worrying about a server. I’ve already updated to ASP.NET Core 3.1 and it was a simple config change to do that now that my setup is so streamlined.

What are your migration stories?